Azure IoT Operations Preview is now available

Posted on: November 22, 2023

Azure IoT Operations was announced at Ignite last week.

Read more about the announcement in the following blog post and official documentation pages:

Accelerating Industrial Transformation with Azure IoT Operations

The Arc Jumpstart team has been busy and has created a new Jumpstart scenario for this topic:

Enhance operational insights at the edge using Azure IoT Operations (AIO)

So quite a lot of content is already available for this new service. Of course, I had to deploy it to the Intel NUC machine running in my living room:

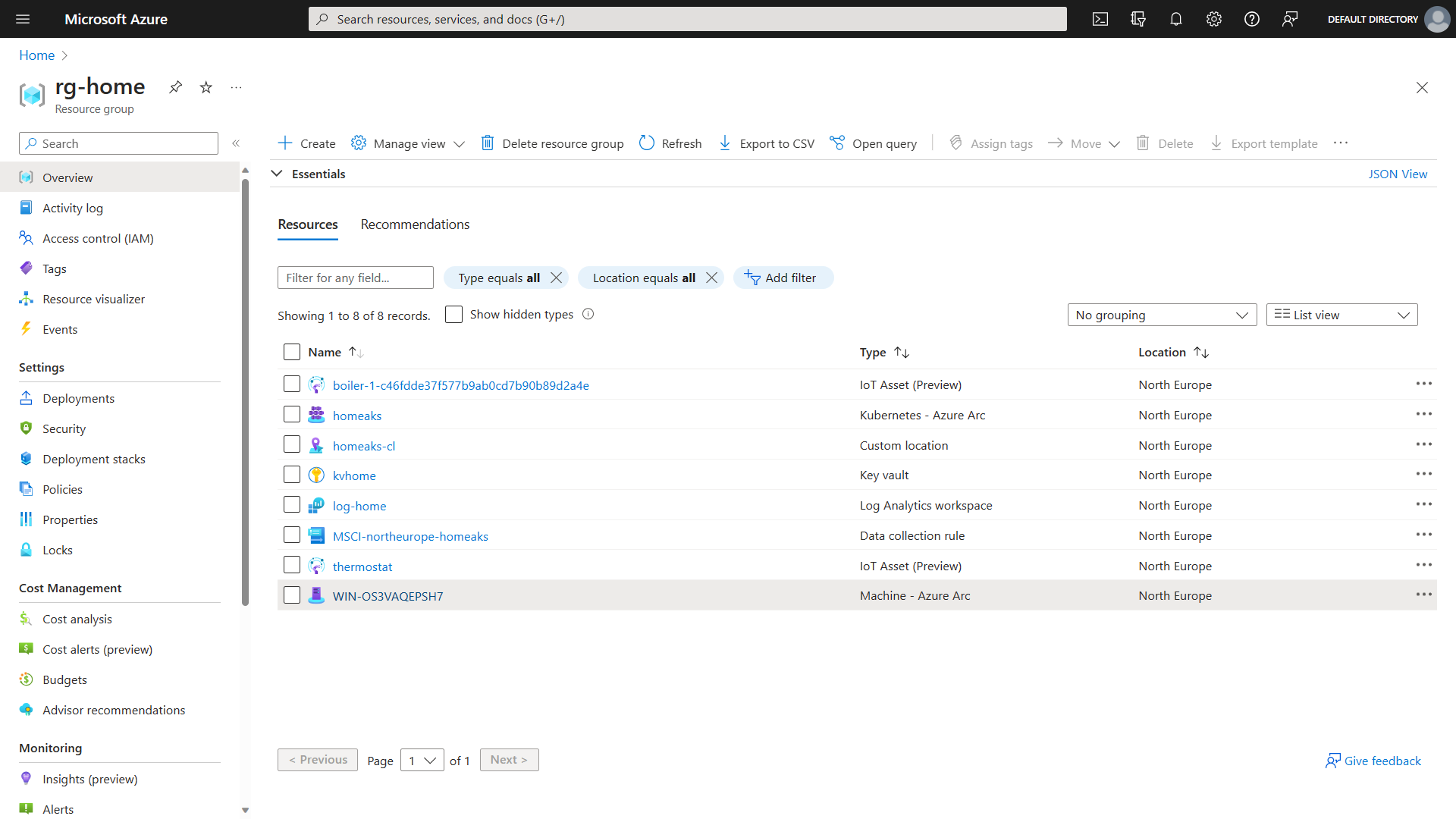

Here are my deployed resources in Azure:

The main resources in the above list are:

Azure Arc-enabled servers: Intel NUC machine running Windows Server 2022.

Azure Arc-enabled Kubernetes: AKS Edge Essentials.

And the new IoT assets, such as the thermostat and the boiler.

I want to reiterate that even though Azure IoT Operations itself is in preview, it builds on top of many other services that are already generally available (GA):

Arc-enabled servers became generally available in 2020.

AKS Edge Essentials became generally available in March 2023.

The MQTT broker feature in Azure Event Grid became generally available in November 2023.

You want to build your service on the shoulders of giants.

Arc capabilities

It would be hard to talk about Azure IoT Operations without talking about Azure Arc. Arc solves many of the challenges you face when building an edge solution.

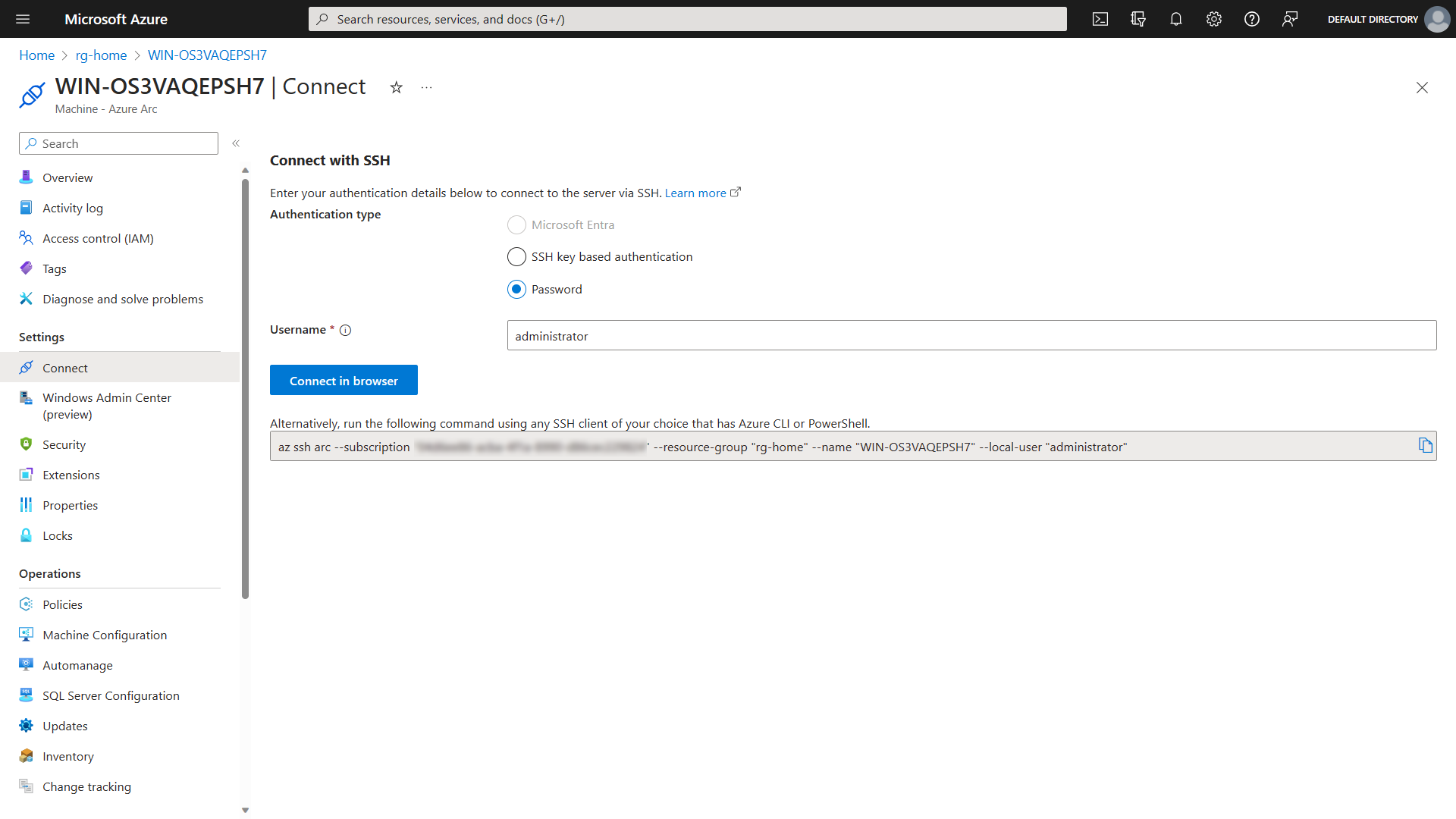

I’ll pick a few examples to show here. The first one is the set of options for connecting to your device: you can use SSH, Windows Admin Center, and RDP to access Arc-enabled servers.

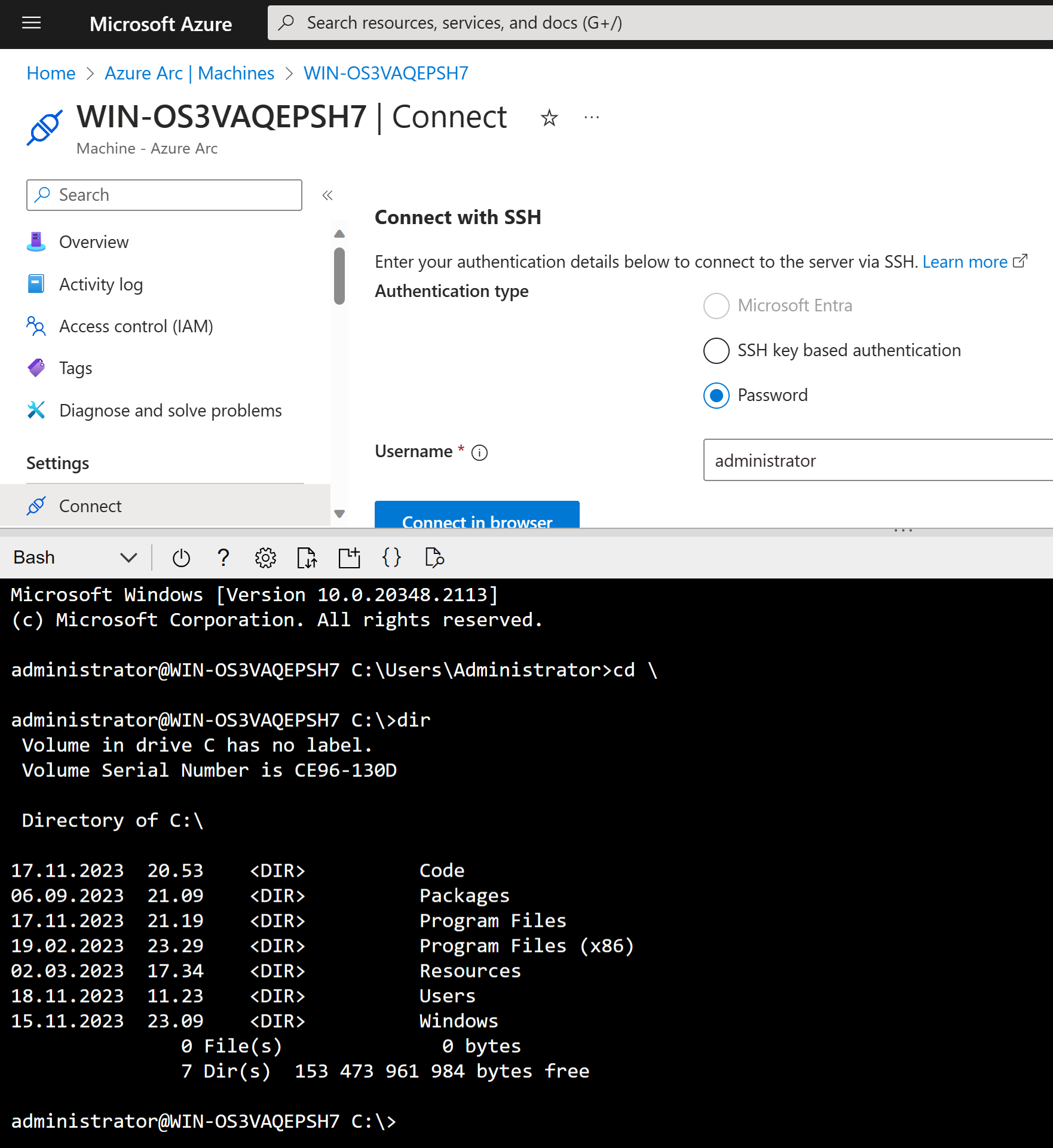

You can connect from the Azure portal using Cloud Shell by clicking the Connect in browser button:

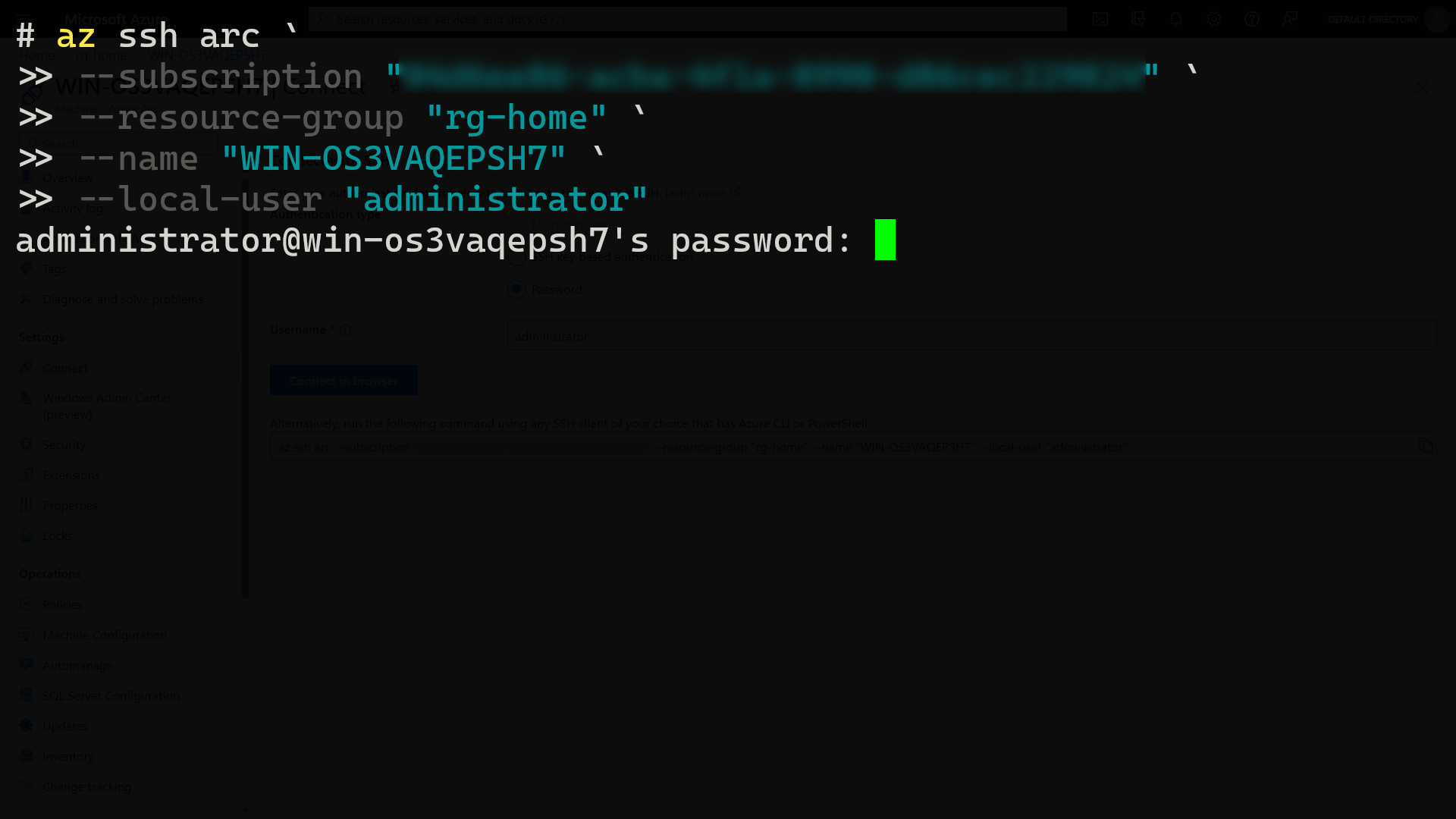

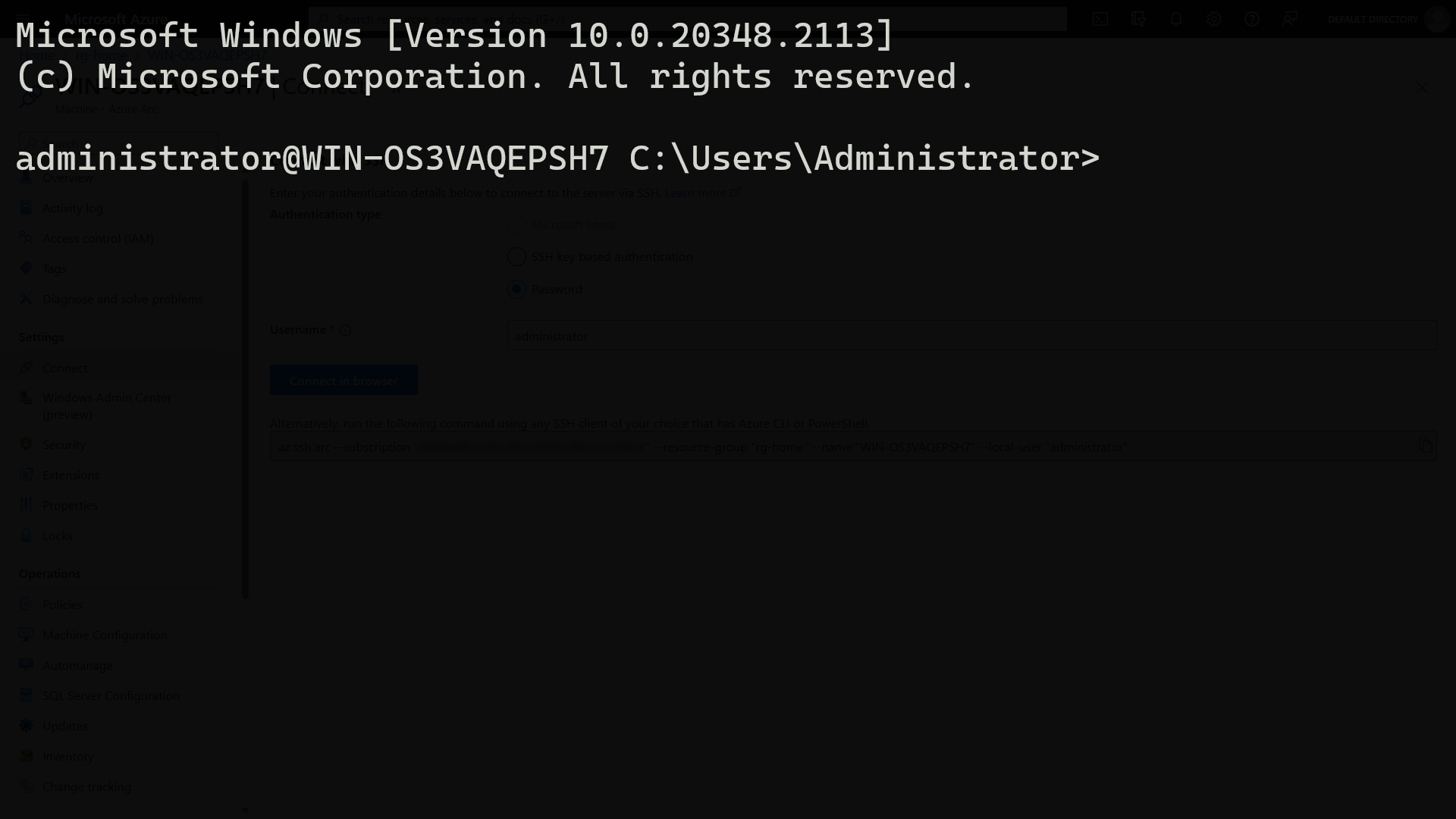

Alternatively, you can connect from your local machine using the Azure CLI:

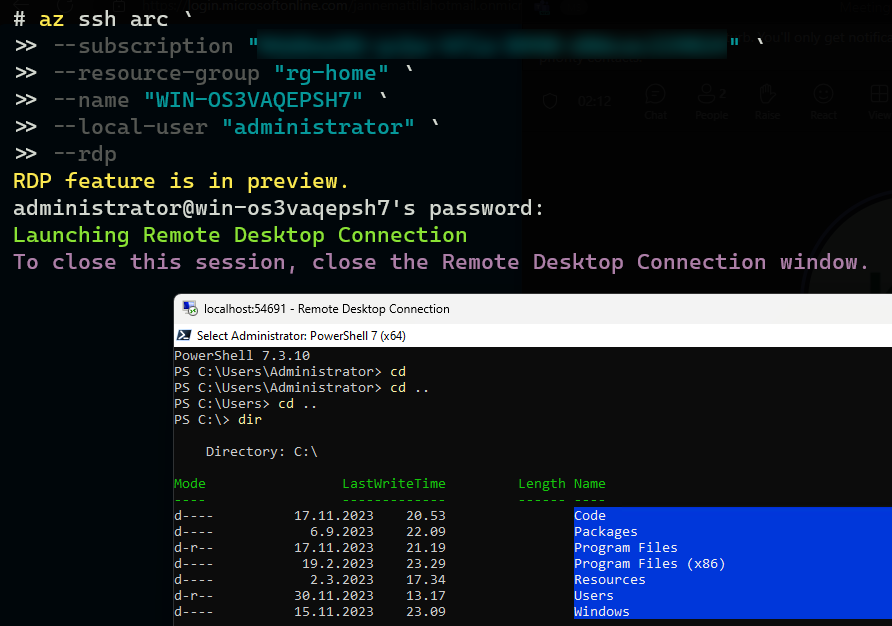

Or you can use RDP with the --rdp option:

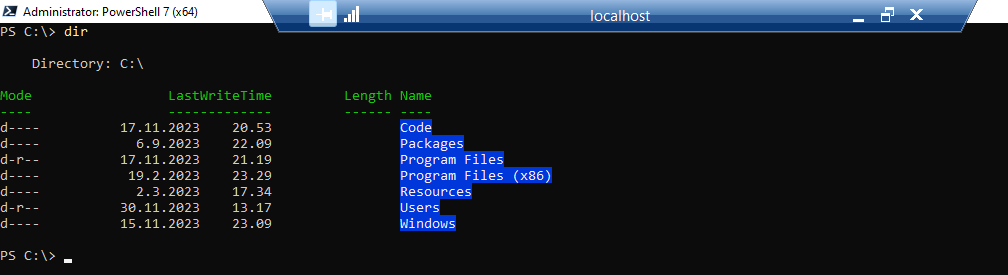

Then you get the full RDP experience on your machine:

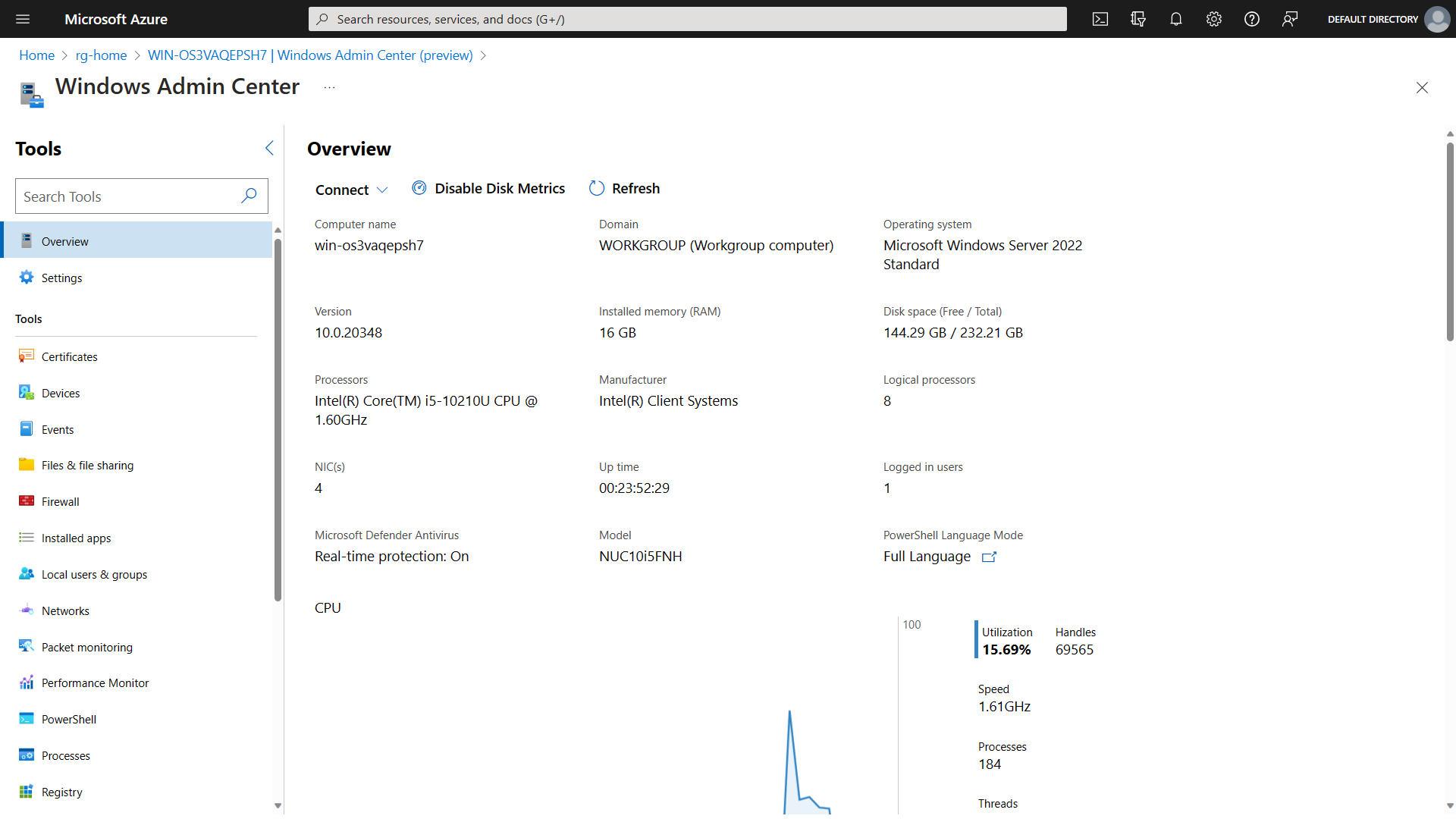
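The CLI commands in the screenshots above come from the Azure CLI `ssh` extension (`az ssh arc`). As a minimal sketch, the Python helper below only assembles those command lines; the resource group `rg-arc`, machine name `nuc`, and local user `Administrator` are hypothetical placeholders, and it assumes SSH access through Arc is already configured on the server.

```python
import shlex
from typing import List


def az_ssh_arc_command(resource_group: str, machine: str, local_user: str,
                       rdp: bool = False) -> List[str]:
    """Build an `az ssh arc` command line for an Arc-enabled server.

    Requires the Azure CLI `ssh` extension. With rdp=True the `--rdp`
    option is appended, which starts an RDP session over the SSH connection.
    """
    command = [
        "az", "ssh", "arc",
        "--resource-group", resource_group,
        "--name", machine,
        "--local-user", local_user,
    ]
    if rdp:
        command.append("--rdp")
    return command


# Hypothetical names; replace them with your own resource group, machine, and user.
ssh_cmd = az_ssh_arc_command("rg-arc", "nuc", "Administrator")
rdp_cmd = az_ssh_arc_command("rg-arc", "nuc", "Administrator", rdp=True)
print(shlex.join(ssh_cmd))
print(shlex.join(rdp_cmd))
```

Passing either list to `subprocess.call` would start the session; keeping the arguments as a list avoids shell-quoting issues.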

You can also use Windows Admin Center to connect to your Arc-enabled servers:

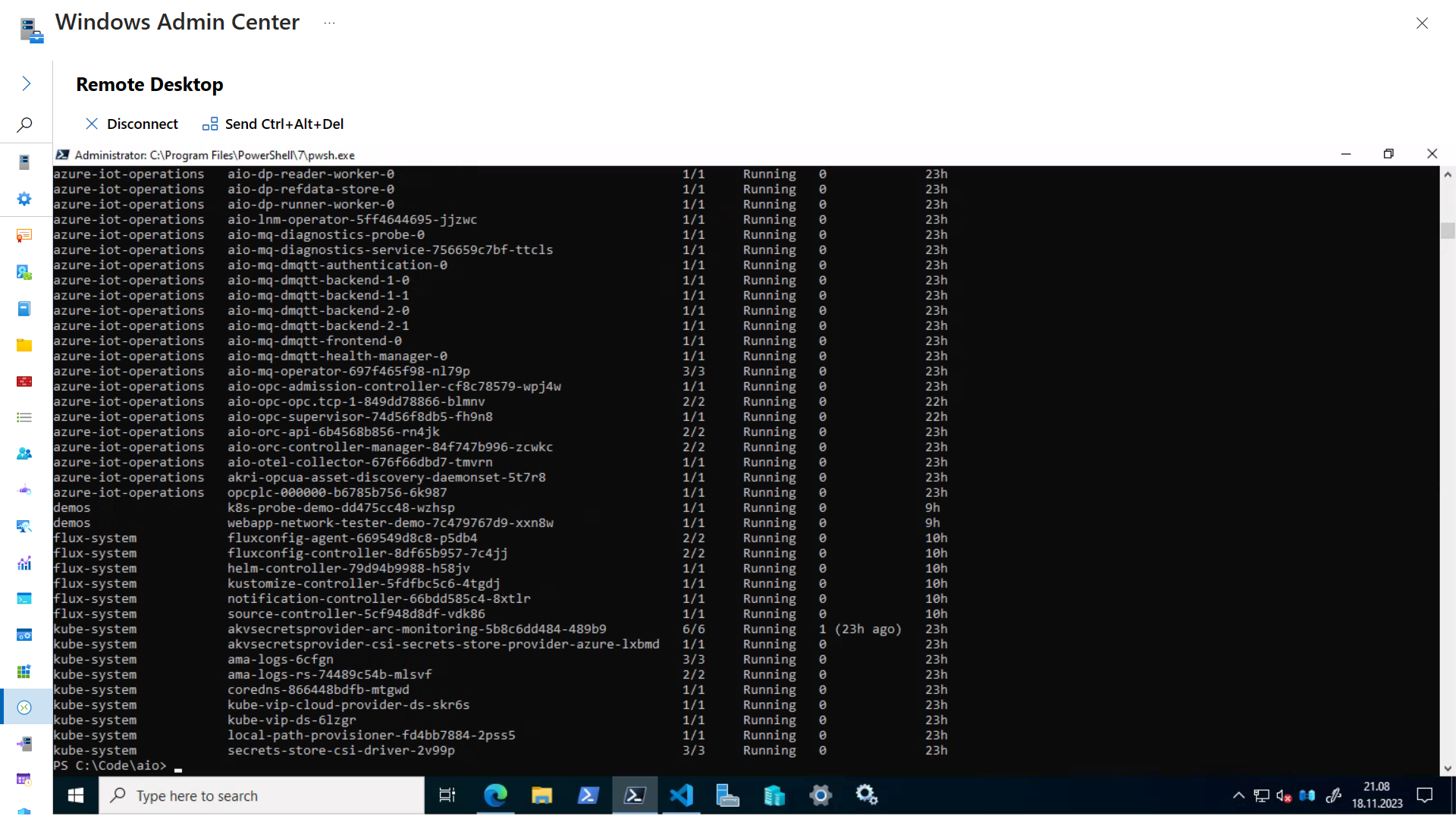

From Windows Admin Center, you can then use Remote Desktop to connect to your machine:

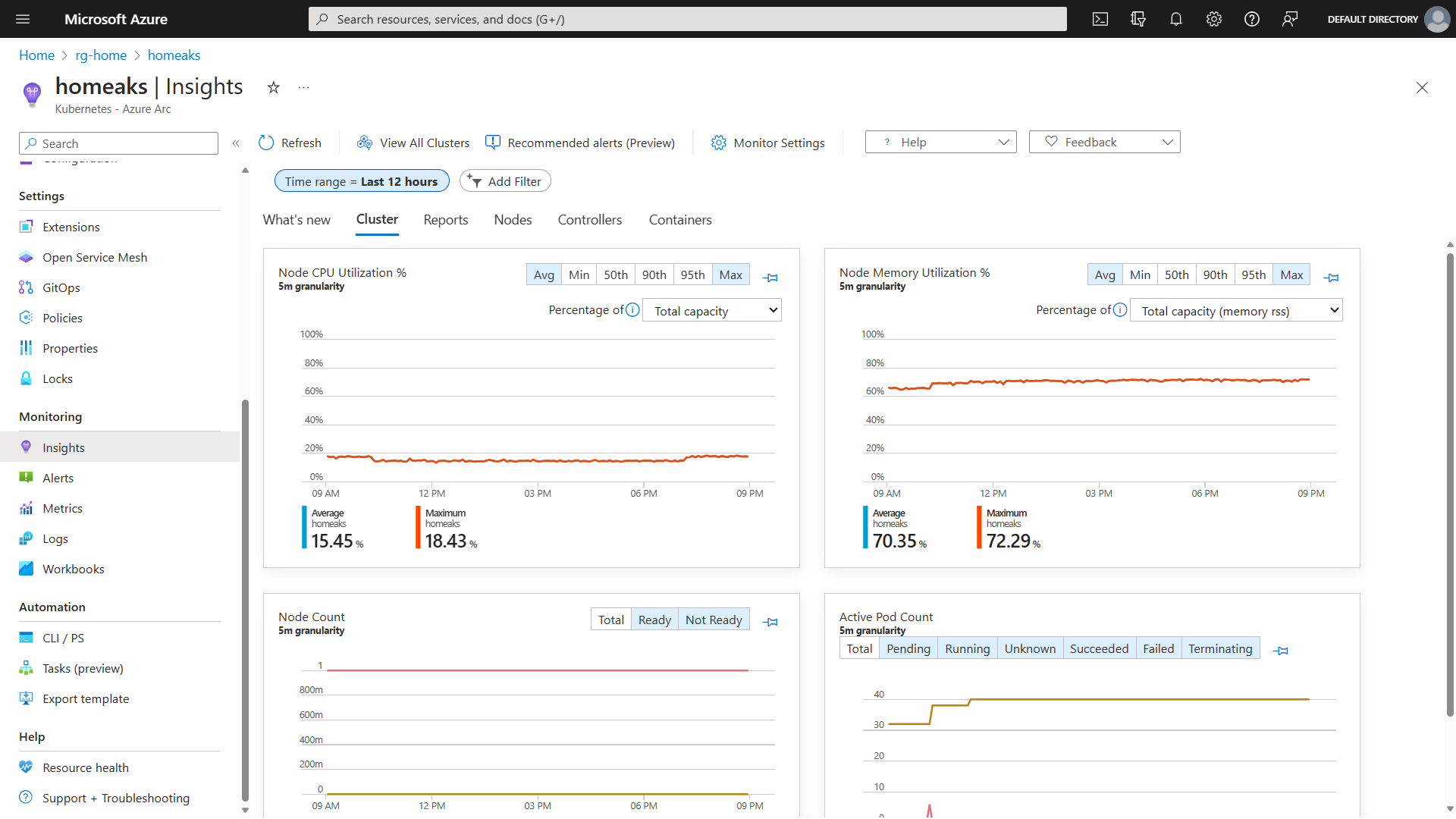

Azure Arc-enabled Kubernetes has tons of features, but I’ll show just two of my favorites. The first feature to highlight is Azure Monitor Container Insights for Azure Arc-enabled Kubernetes clusters:

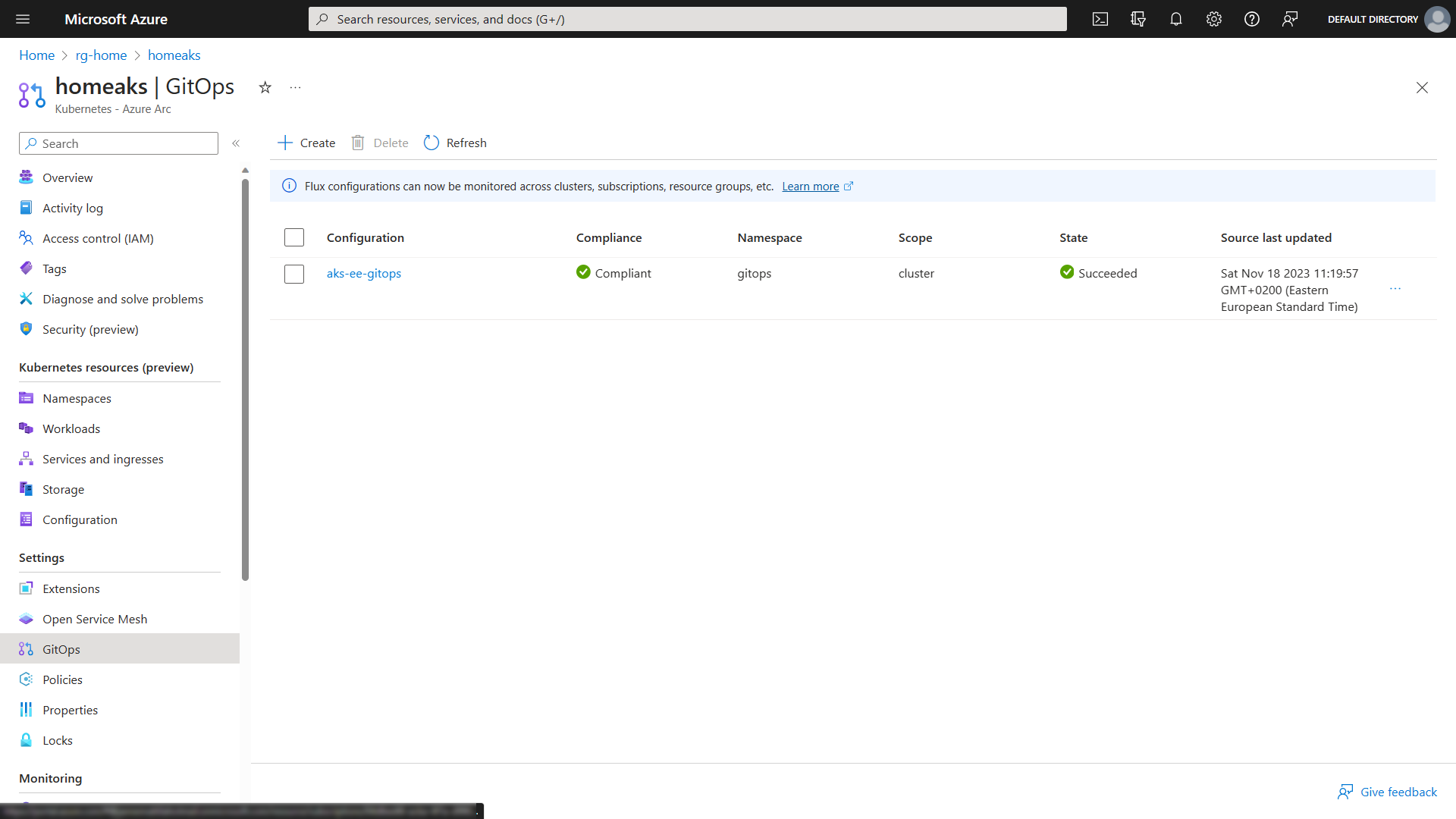

The second one is GitOps with Flux v2 for application deployment:

With the above configuration, the cluster pulls its configuration from this GitHub repository and deploys it automatically:
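The Flux configuration itself is shown only in the portal. If you prefer the command line, a similar configuration can be created with `az k8s-configuration flux create` from the Azure CLI `k8s-configuration` extension. The sketch below just builds the argument list; the resource group, configuration name, namespace, branch, and kustomization path are hypothetical, and the repository URL assumes the JanneMattila/aks-ee-gitops repository referenced in this post.

```python
import shlex
from typing import List


def flux_create_command(resource_group: str, cluster_name: str, config_name: str,
                        repo_url: str, branch: str, path: str) -> List[str]:
    """Build an `az k8s-configuration flux create` command line.

    Arc-enabled Kubernetes clusters use the cluster type `connectedClusters`.
    """
    return [
        "az", "k8s-configuration", "flux", "create",
        "--resource-group", resource_group,
        "--cluster-name", cluster_name,
        "--cluster-type", "connectedClusters",
        "--name", config_name,
        "--namespace", "cluster-config",  # hypothetical namespace for the config objects
        "--scope", "cluster",
        "--url", repo_url,
        "--branch", branch,
        # One kustomization given as separate key=value arguments; the name is
        # hypothetical, and prune=true removes resources that are deleted from Git.
        "--kustomization", "name=apps", f"path={path}", "prune=true",
    ]


# "homeaks" is the cluster name used later in this post; other values are placeholders.
command = flux_create_command(
    "rg-arc", "homeaks", "gitops",
    "https://github.com/JanneMattila/aks-ee-gitops", "main", "./",
)
print(shlex.join(command))
```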

Azure IoT Operations (AIO)

Azure IoT Operations has its own portal:

https://iotoperations.azure.com

It displays all your AIO-enabled clusters in the home view:

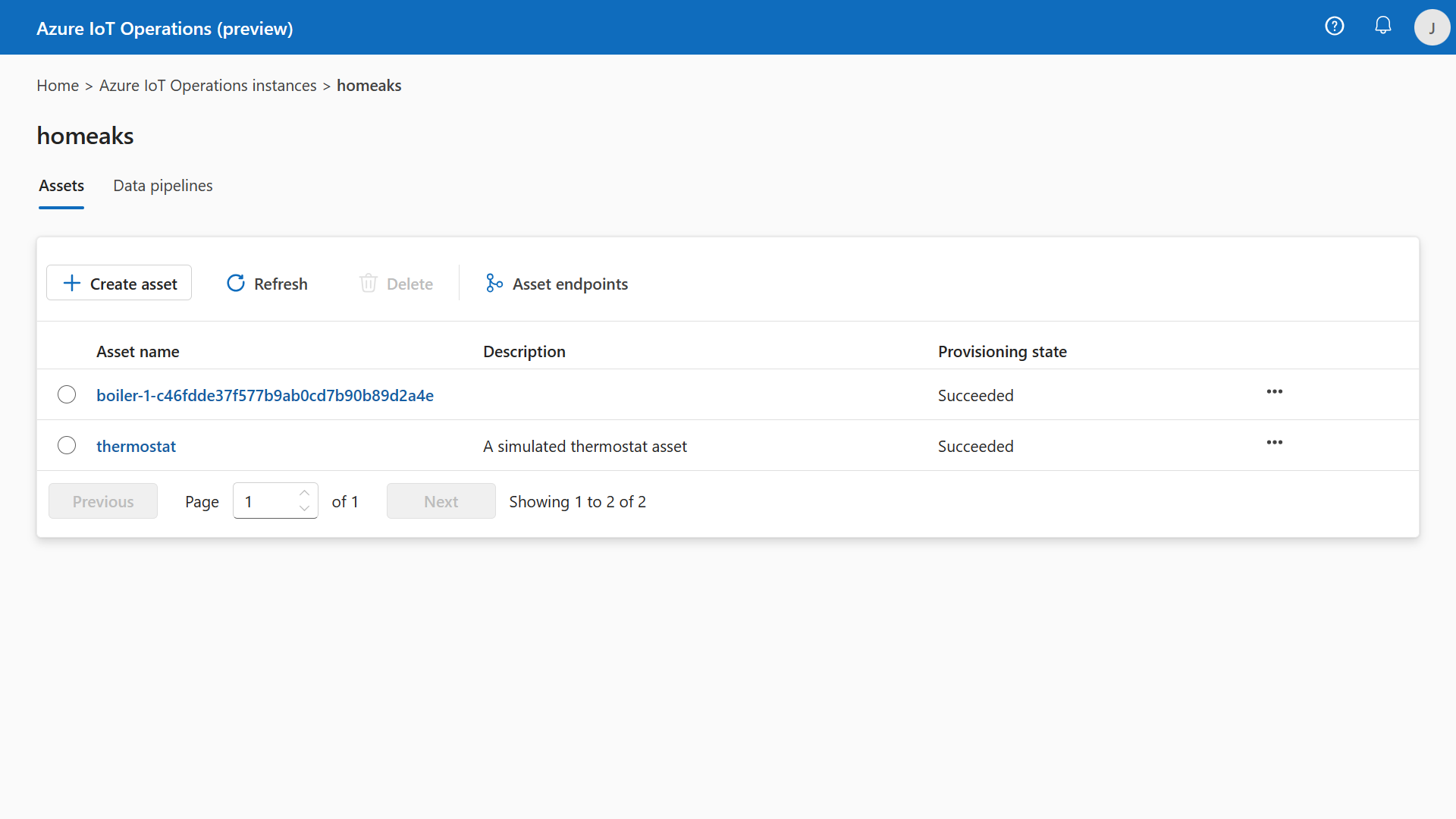

Opening my homeaks cluster shows all the assets underneath it:

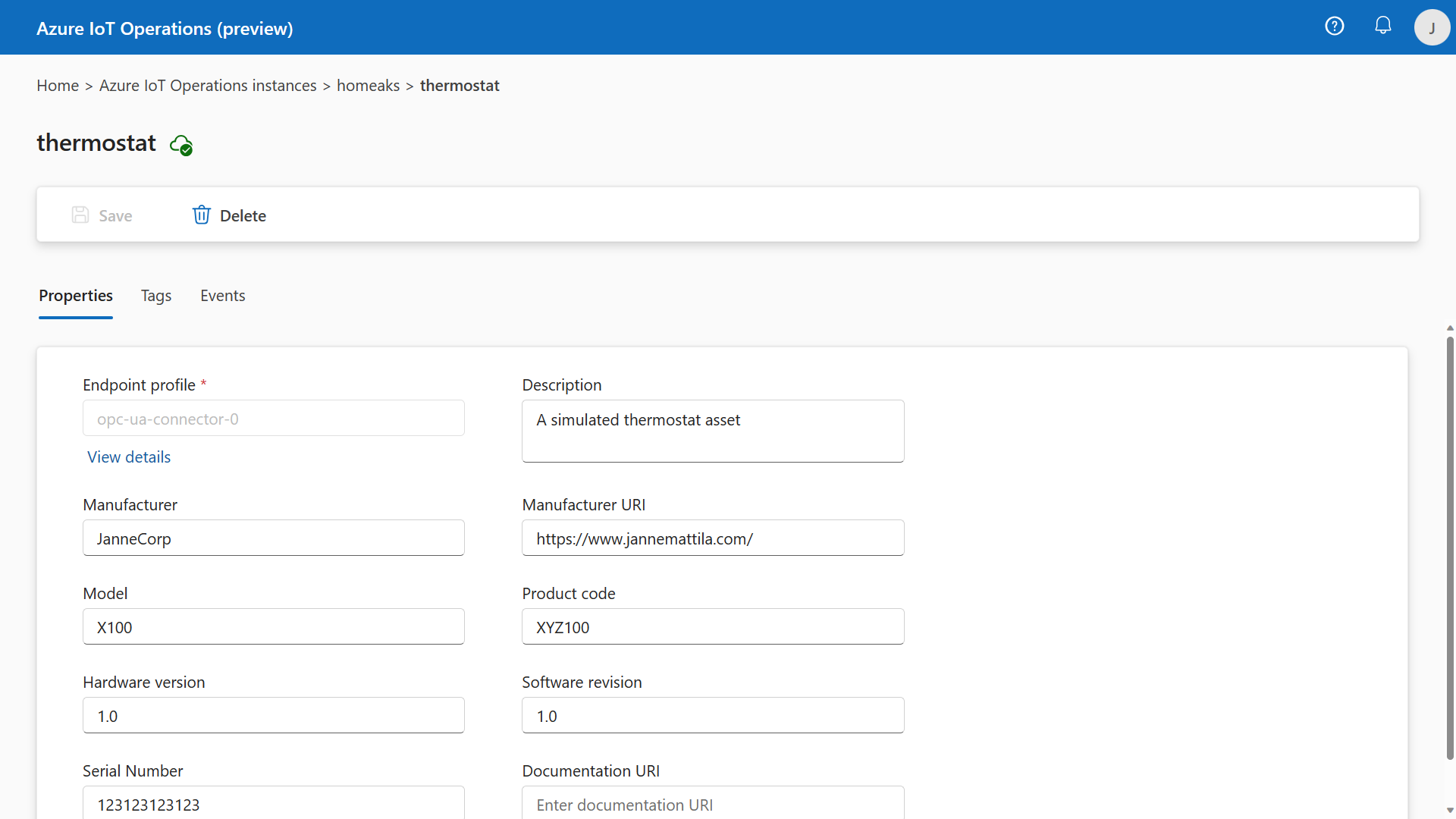

Thermostat properties:

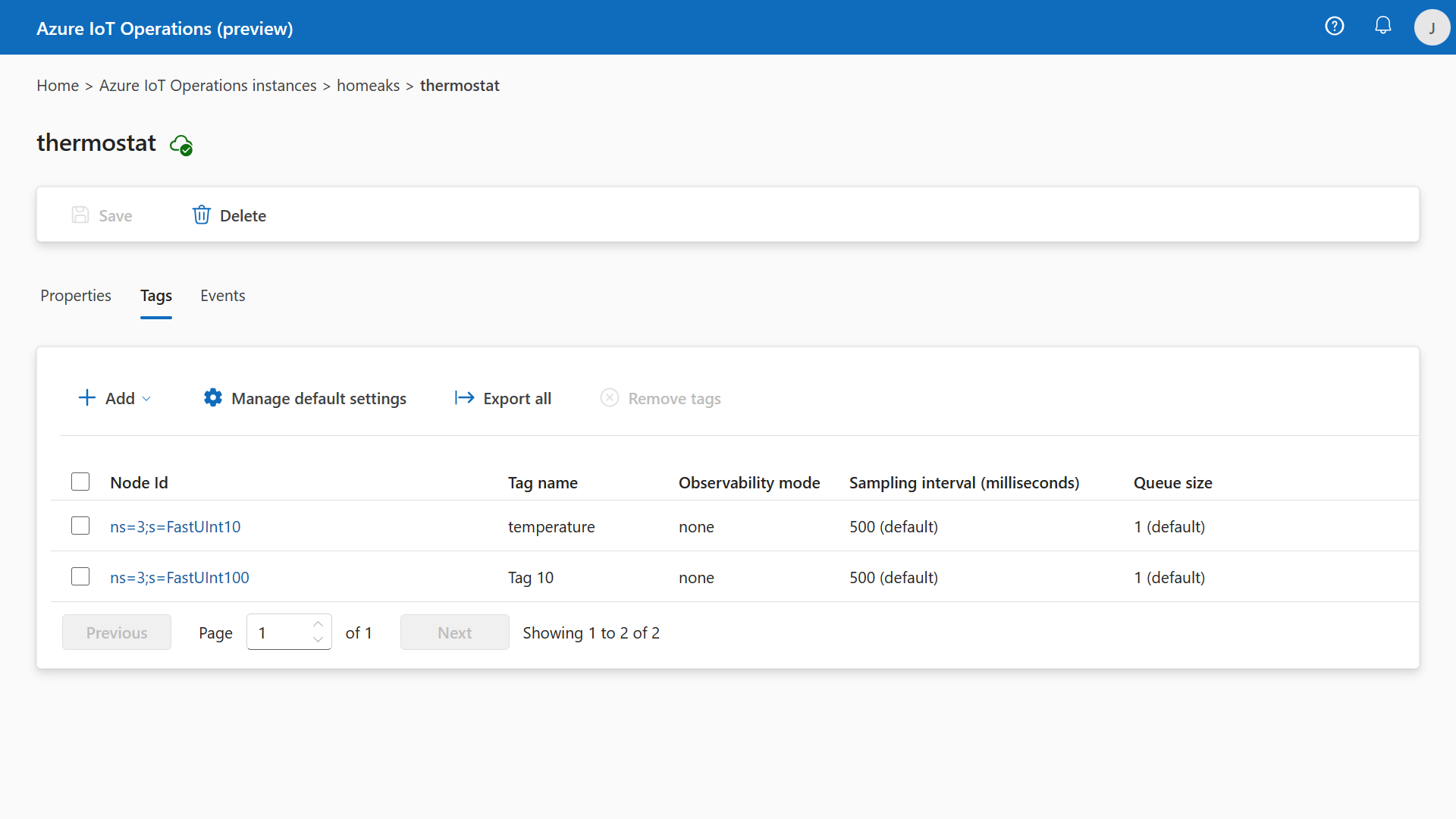

Thermostat tags:

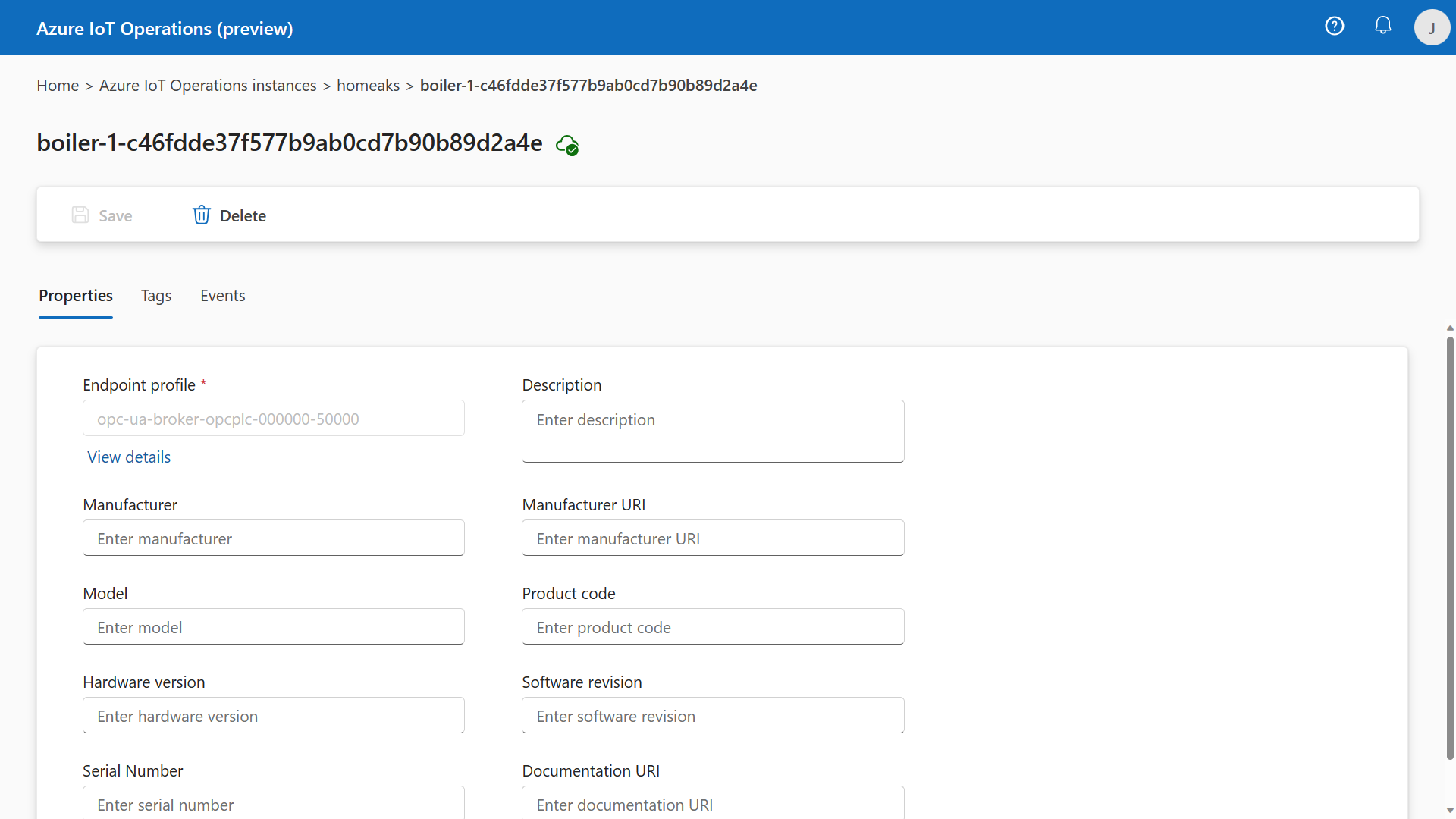

Boiler properties:

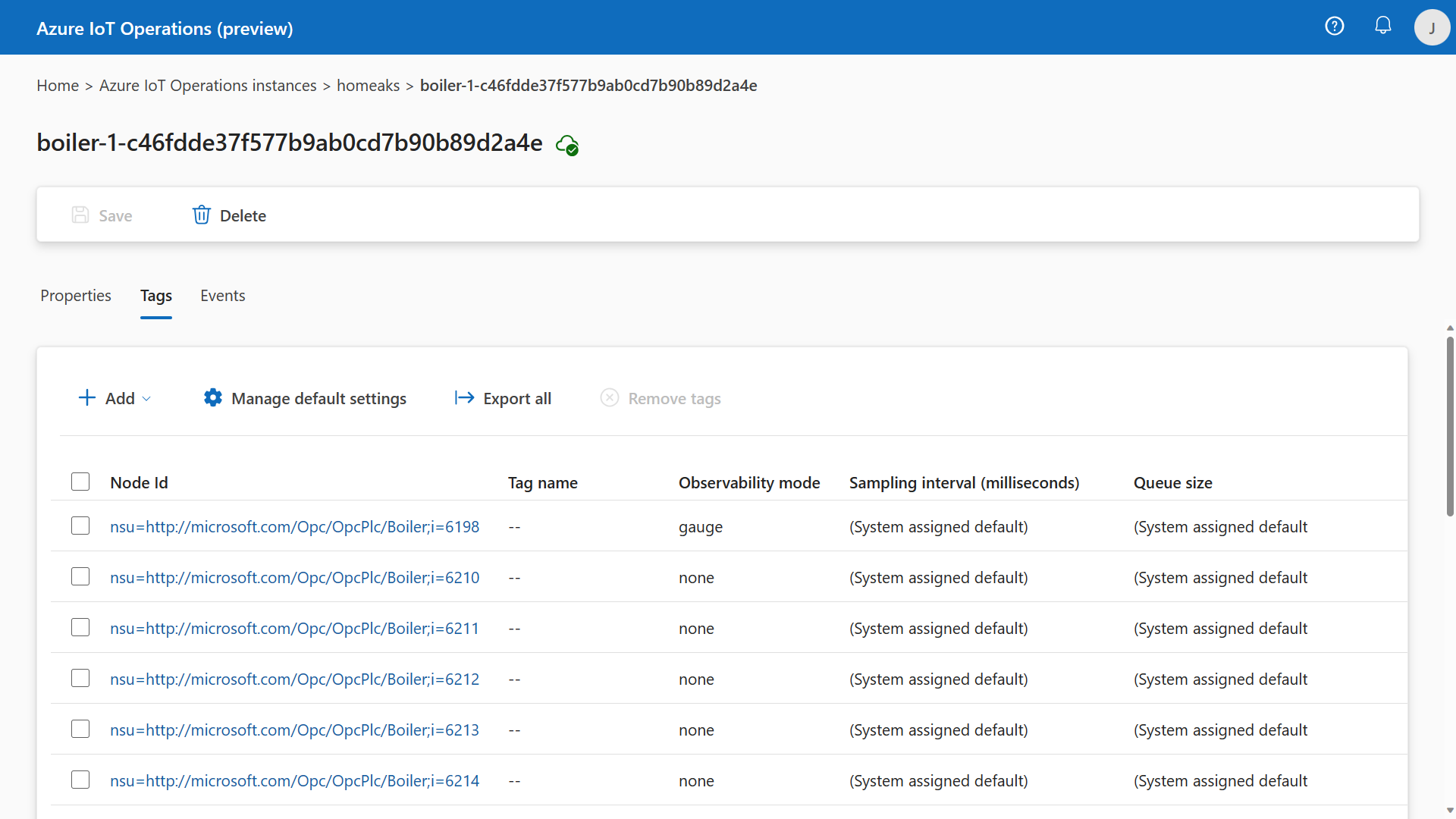

Boiler tags:

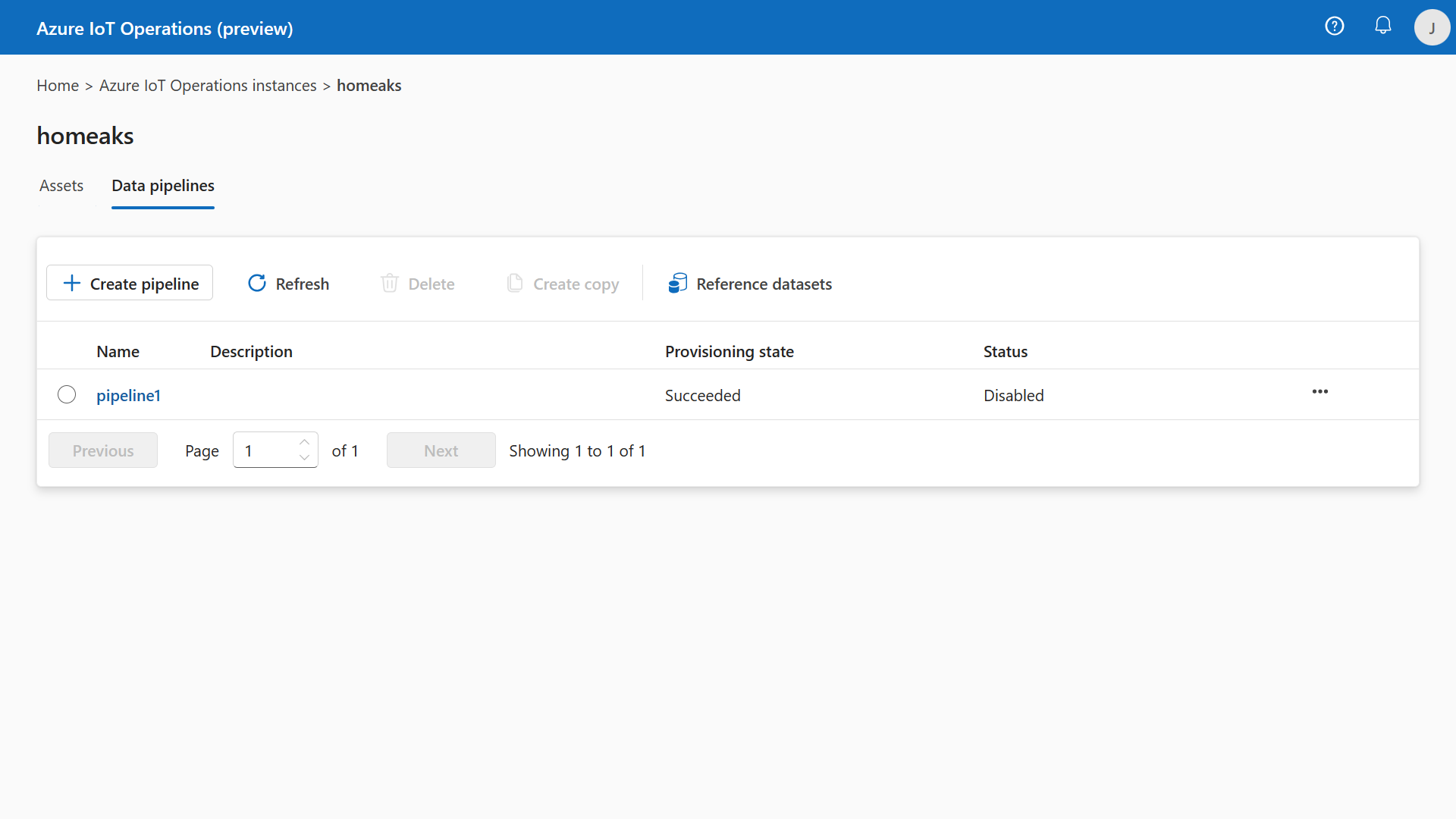

I can also manage Data pipelines, which are executed on the edge, and I can do all of this from the same portal:

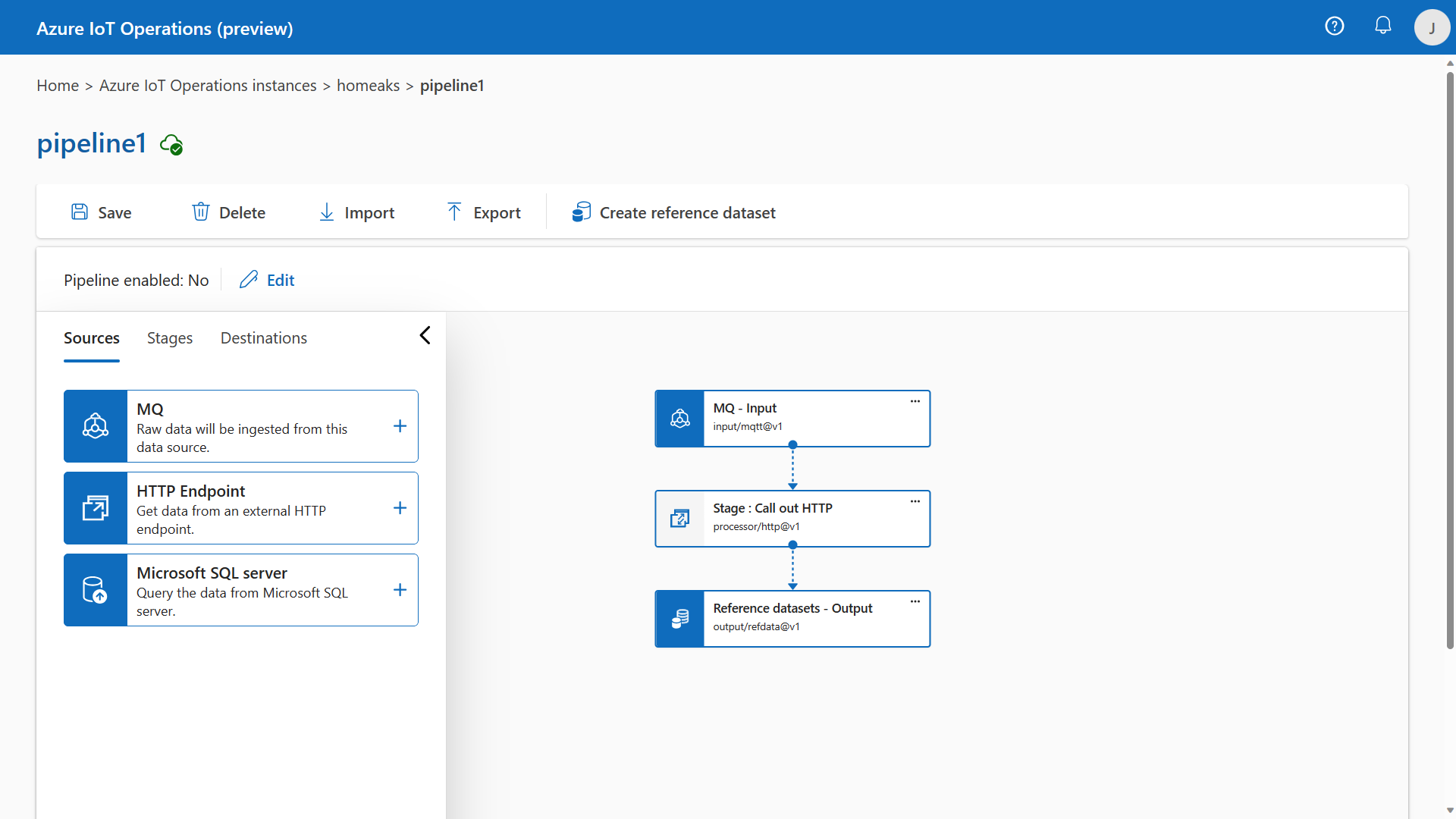

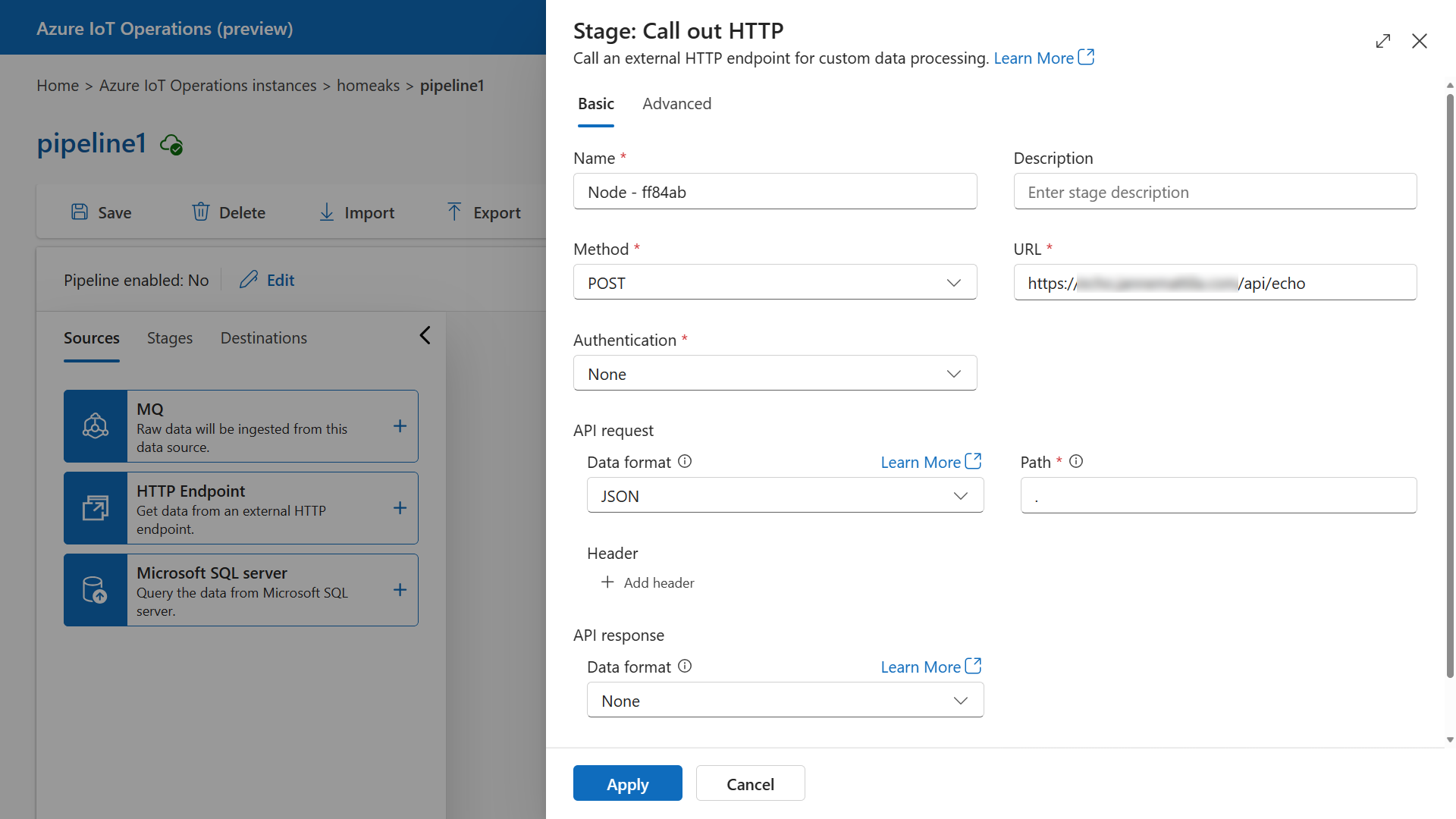

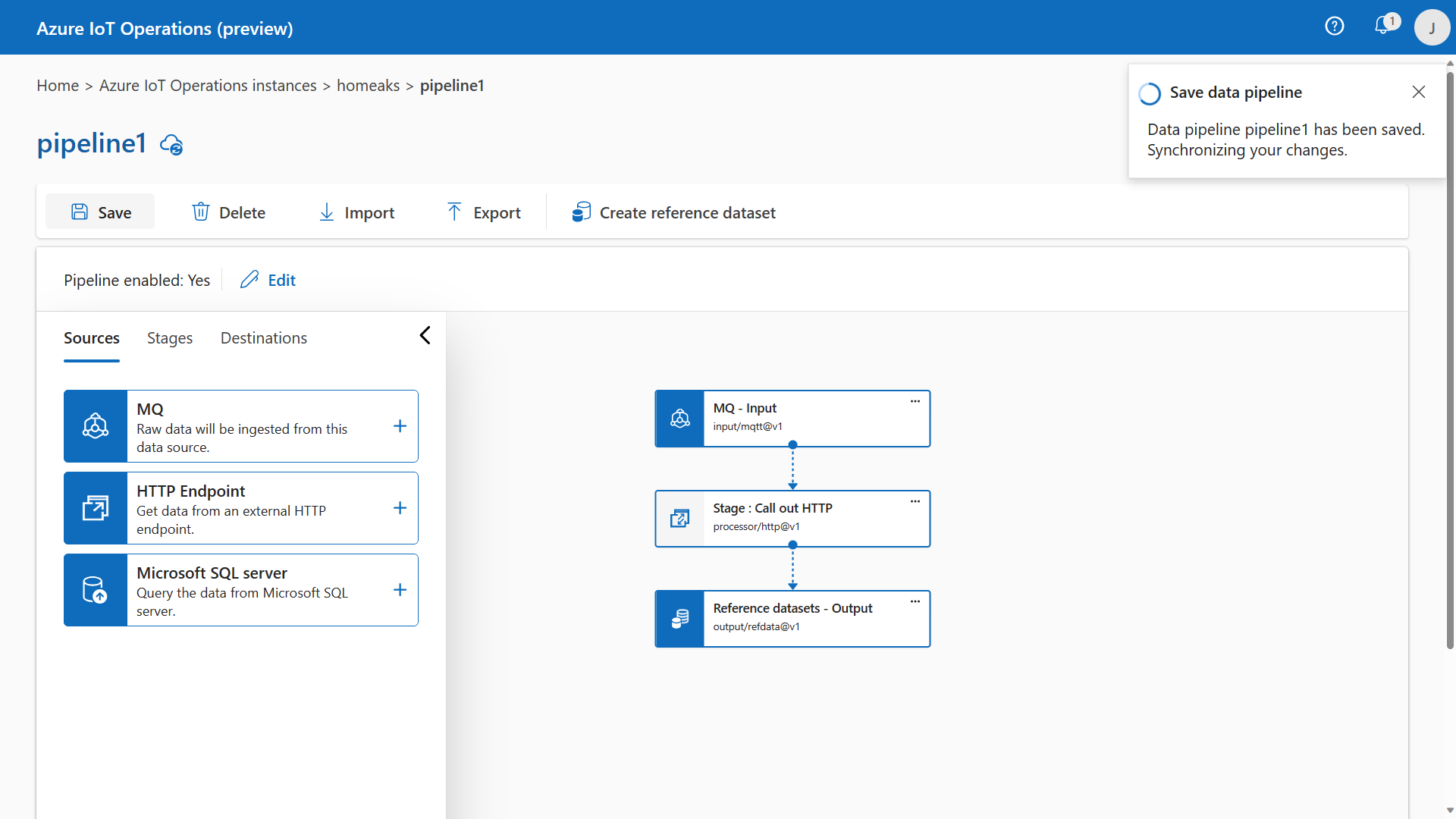

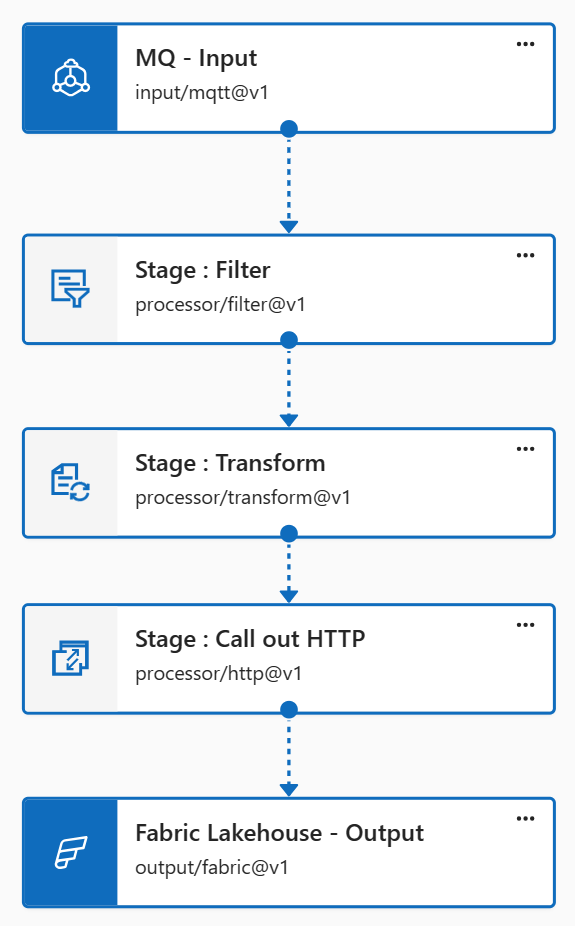

I’ve created the world’s simplest pipeline just to show how it works:

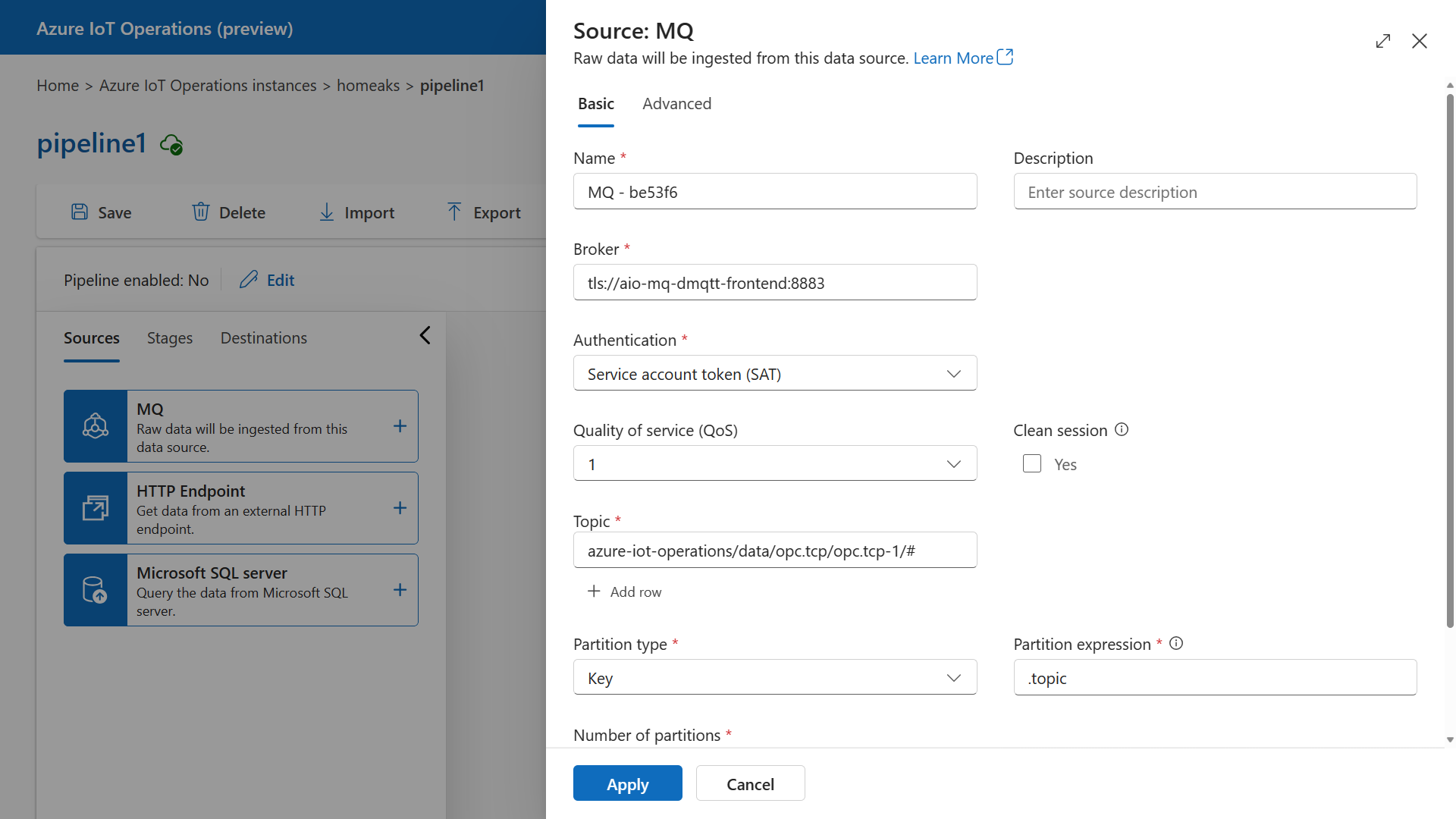

It takes messages from MQTT topics (note the # wildcard at the end of the MQTT topic filter):
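The `#` wildcard matters because the pipeline subscribes with a topic filter rather than a single topic. To illustrate the standard MQTT wildcard rules (`+` matches exactly one level, `#` matches the parent level and everything below it), here is a small, self-contained matcher. The filter `azure-iot-operations/data/opc.tcp/opc.tcp-1/#` is an assumption chosen to match the topics in the messages below, not necessarily the exact filter in my pipeline.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic matches a topic filter.

    '+' matches exactly one topic level; '#' (valid only as the last level)
    matches the parent level and any number of child levels.
    """
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    # Per the MQTT spec, wildcards in the first level don't match topics starting with '$'.
    if topic.startswith("$") and filter_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(filter_levels):
        if level == "#":
            return i == len(filter_levels) - 1  # '#' must be the last level
        if i >= len(topic_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


# Assumed filter; the topics come from the captured messages in this post.
TOPIC_FILTER = "azure-iot-operations/data/opc.tcp/opc.tcp-1/#"
for topic in [
    "azure-iot-operations/data/opc.tcp/opc.tcp-1/thermostat",
    "azure-iot-operations/data/opc.tcp/opc.tcp-1/boiler-1-c46fdde37f577b9ab0cd7b90b89d2a4e",
]:
    print(topic, topic_matches(TOPIC_FILTER, topic))
```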

For demo purposes, I invoke an external REST endpoint for each message, so that I can see the message content and how frequently messages are being sent:

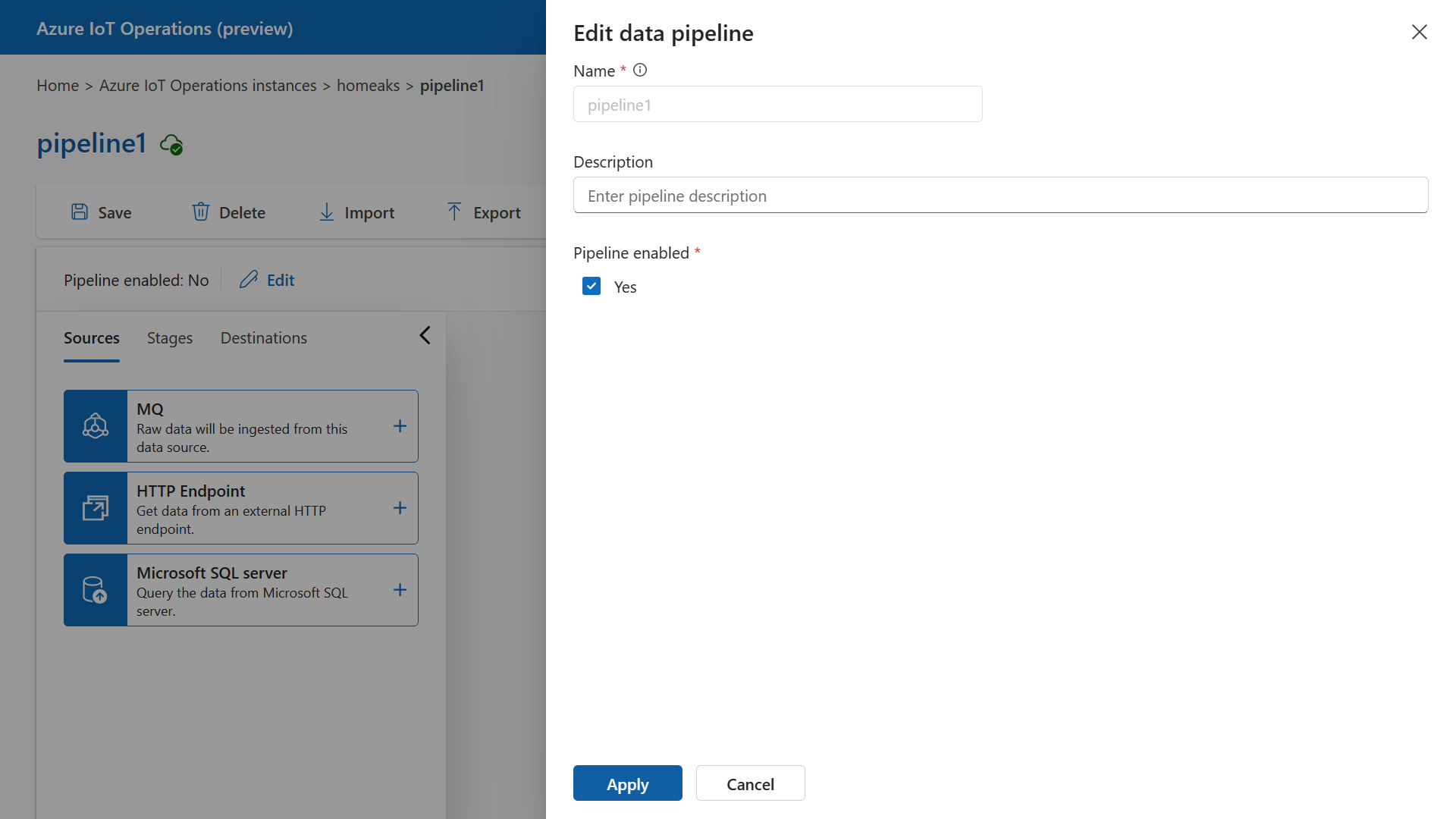
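Any endpoint that shows incoming requests should work for this. As a hypothetical stand-in, a tiny receiver built on Python's standard `http.server` module could print each message and the time since the previous one. It assumes the pipeline POSTs each message as a JSON body with a `Content-Length` header; the port 8080 and all names are arbitrary choices for this sketch.

```python
import json
import time
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Any, List, Optional, Tuple

# (arrival time in seconds, parsed message) for every message received so far
received: List[Tuple[float, Any]] = []


def record_message(body: bytes, now: float) -> Optional[float]:
    """Parse a JSON message, store it, and return the seconds elapsed since
    the previous message (None for the first one)."""
    message = json.loads(body)
    previous = received[-1][0] if received else None
    received.append((now, message))
    return None if previous is None else now - previous


class CaptureHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", "0"))
        gap = record_message(self.rfile.read(length), time.monotonic())
        print(json.dumps(received[-1][1], indent=2))
        if gap is not None:
            print(f"{gap:.3f} s since previous message")
        self.send_response(200)
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Block and handle requests until interrupted."""
    HTTPServer(("0.0.0.0", port), CaptureHandler).serve_forever()
```

Run `serve()` on a machine reachable from the cluster and point the pipeline's REST call at `http://<host>:8080/`.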

I can then enable that pipeline from the portal:

It’s then synchronized to the edge and executed there:

Now messages start to flow to my external endpoint, and I can capture them there. Here is one example message:

```json
{
  "payload": {
    "dataSetWriterName": "thermostat",
    "messageType": "ua-deltaframe",
    "payload": {
      "Tag 10": {
        "SourceTimestamp": "2023-11-18T19:16:14.3165612Z",
        "Value": 84854
      },
      "temperature": {
        "SourceTimestamp": "2023-11-18T19:16:14.3164787Z",
        "Value": 84854
      }
    },
    "sequenceNumber": 69281,
    "timestamp": "2023-11-18T19:16:14.6770589Z"
  },
  "properties": {
    "contentType": "application/json",
    "payloadFormat": 1
  },
  "qos": 0,
  "systemProperties": {
    "partitionId": 0,
    "partitionKey": "azure-iot-operations/data/opc.tcp/opc.tcp-1/thermostat",
    "timestamp": "2023-11-18T19:16:14.681Z"
  },
  "topic": "azure-iot-operations/data/opc.tcp/opc.tcp-1/thermostat",
  "userProperties": [
    {
      "key": "mqtt-enqueue-time",
      "value": "2023-11-18 19:16:14.678 +00:00"
    },
    {
      "key": "uuid",
      "value": "d52b7fbf-b98d-4105-82b2-7e1545397485"
    },
    {
      "key": "externalAssetId",
      "value": "d52b7fbf-b98d-4105-82b2-7e1545397485"
    },
    {
      "key": "traceparent",
      "value": "00-010ff7463fb00cdb8c8babadea229ecb-772f11e4996284b8-01"
    }
  ]
}
```

Here is another example message:

```json
{
  "payload": {
    "dataSetWriterName": "boiler-1-c46fdde37f577b9ab0cd7b90b89d2a4e",
    "messageType": "ua-deltaframe",
    "payload": {
      "dtmi:microsoft:opcuabroker:Boiler__2:tlm_CurrentTemperature_6211;1": {
        "SourceTimestamp": "2023-11-18T19:16:14.3122101Z",
        "Value": 85
      }
    },
    "sequenceNumber": 79112,
    "timestamp": "2023-11-18T19:16:14.677051Z"
  },
  "properties": {
    "contentType": "application/json",
    "payloadFormat": 1
  },
  "qos": 0,
  "systemProperties": {
    "partitionId": 0,
    "partitionKey": "azure-iot-operations/data/opc.tcp/opc.tcp-1/boiler-1-c46fdde37f577b9ab0cd7b90b89d2a4e",
    "timestamp": "2023-11-18T19:16:14.681Z"
  },
  "topic": "azure-iot-operations/data/opc.tcp/opc.tcp-1/boiler-1-c46fdde37f577b9ab0cd7b90b89d2a4e",
  "userProperties": [
    {
      "key": "mqtt-enqueue-time",
      "value": "2023-11-18 19:16:14.677 +00:00"
    },
    {
      "key": "traceparent",
      "value": "00-232fabe6f2baa6ffd22fa0fd0677bbe5-9025710285afea84-01"
    }
  ]
}
```
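Both messages share the same envelope: the asset name is in `payload.dataSetWriterName`, and the tag values sit in the inner `payload`, keyed by tag name. A short Python sketch, assuming exactly that shape, flattens one message into rows; the sample is the first message above, trimmed to the fields the function reads.

```python
import json
from typing import Any, Dict, List, Tuple


def flatten_tags(message: Dict[str, Any]) -> List[Tuple[str, str, Any, str]]:
    """Return (asset, tag, value, source timestamp) rows from one captured message.

    Assumes the shape of the samples above: the outer "payload" holds
    "dataSetWriterName" plus an inner "payload" keyed by tag name.
    """
    outer = message["payload"]
    asset = outer["dataSetWriterName"]
    return [
        (asset, tag, sample["Value"], sample["SourceTimestamp"])
        for tag, sample in outer["payload"].items()
    ]


captured = json.loads("""
{"payload": {"dataSetWriterName": "thermostat",
             "payload": {"Tag 10": {"SourceTimestamp": "2023-11-18T19:16:14.3165612Z", "Value": 84854},
                         "temperature": {"SourceTimestamp": "2023-11-18T19:16:14.3164787Z", "Value": 84854}}}}
""")
for row in flatten_tags(captured):
    print(row)
```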

Messages started flowing in quickly, which shows that the data processing pipeline works as expected.

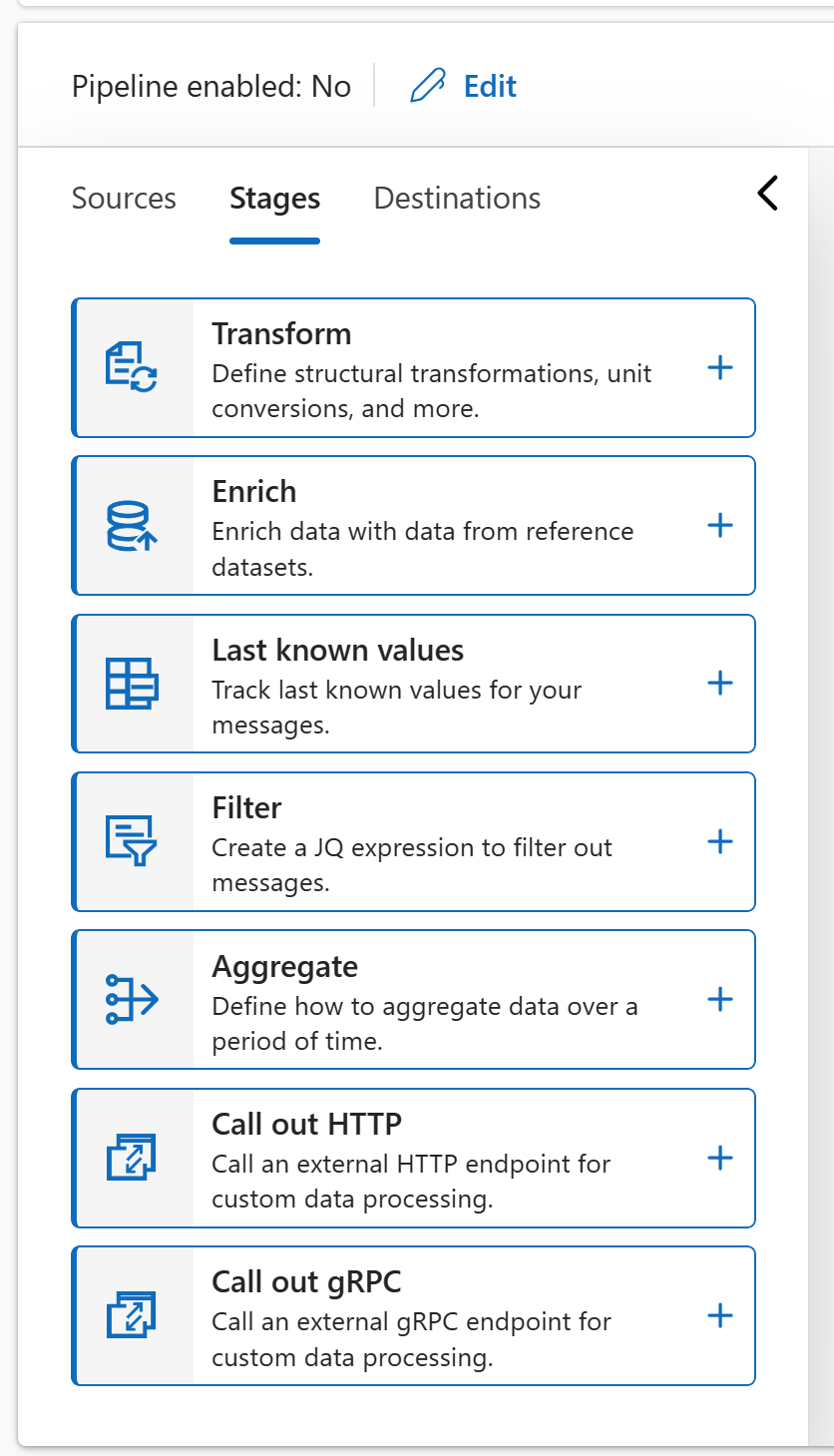

You can process the data on the edge using the capabilities listed under Stages, and you can chain multiple stages in one data processing pipeline:

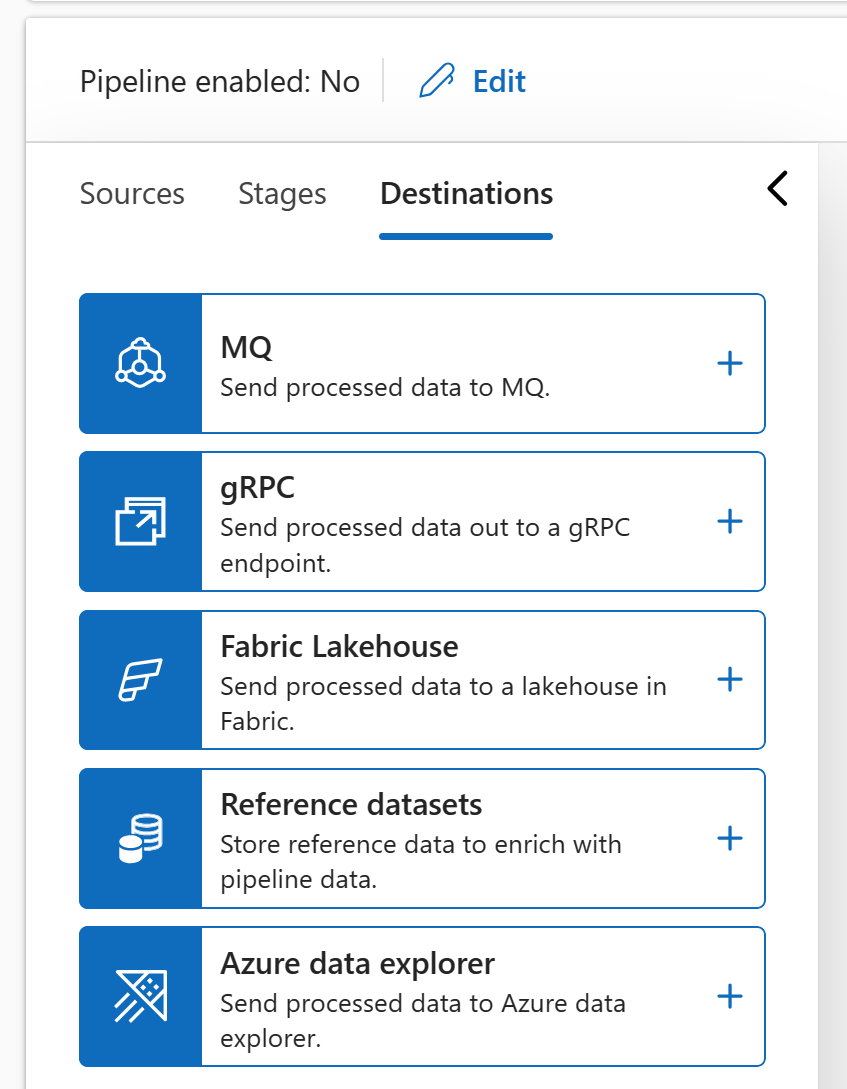

You can use Destinations to push your data to, e.g., Microsoft Fabric:

So let’s enhance the above pipeline as follows:

The Filter stage selects only messages coming from the thermostat:

```jq
.payload.dataSetWriterName == "thermostat"
```
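For readers who don't use jq daily: the expression compares the asset name in the message envelope with `"thermostat"`. A rough Python equivalent, for illustration only (the Filter stage itself evaluates the jq expression); the message dictionaries are trimmed versions of the captured messages.

```python
from typing import Any, Dict


def is_thermostat(message: Dict[str, Any]) -> bool:
    """Python equivalent of the jq filter: .payload.dataSetWriterName == "thermostat".

    A missing key yields False here (jq would evaluate it to null, which
    also fails the comparison).
    """
    return (message.get("payload") or {}).get("dataSetWriterName") == "thermostat"


messages = [
    {"payload": {"dataSetWriterName": "thermostat"}},
    {"payload": {"dataSetWriterName": "boiler-1-c46fdde37f577b9ab0cd7b90b89d2a4e"}},
]
kept = [m for m in messages if is_thermostat(m)]
print(len(kept), "of", len(messages), "messages pass the filter")
```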

The Transform stage keeps only the temperature element:

```jq
.payload |= .payload.temperature
```

Finally, I send only `.payload` to the external endpoint.

This way I get messages like this:

```json
{
  "SourceTimestamp": "2023-11-22T13:08:54.3203304Z",
  "Value": 408414
}
```
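To make the three steps concrete, here is a hedged Python mimic of the whole chain, applied to the thermostat message captured earlier (trimmed) rather than to the message shown just above. `.payload |= .payload.temperature` replaces the outer `payload` with the `temperature` element of the inner `payload`, and the output step then sends only that object. This is a local illustration, not how the pipeline actually executes its stages.

```python
from typing import Any, Dict, Optional


def process(message: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Mimic Filter -> Transform -> output; return what reaches the endpoint, or None."""
    # Filter stage: .payload.dataSetWriterName == "thermostat"
    if message.get("payload", {}).get("dataSetWriterName") != "thermostat":
        return None
    # Transform stage: .payload |= .payload.temperature
    # (the new outer payload is the old inner payload's "temperature" element)
    transformed = dict(message)  # shallow copy so the input message is not modified
    transformed["payload"] = message["payload"]["payload"]["temperature"]
    # Output: only .payload is sent to the external endpoint
    return transformed["payload"]


thermostat = {
    "payload": {
        "dataSetWriterName": "thermostat",
        "payload": {
            "Tag 10": {"SourceTimestamp": "2023-11-18T19:16:14.3165612Z", "Value": 84854},
            "temperature": {"SourceTimestamp": "2023-11-18T19:16:14.3164787Z", "Value": 84854},
        },
    },
    "qos": 0,
}
boiler = {"payload": {"dataSetWriterName": "boiler-1-c46fdde37f577b9ab0cd7b90b89d2a4e", "payload": {}}}

print(process(thermostat))
print(process(boiler))
```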

I managed to do all of this directly from the Azure IoT Operations portal. Pretty powerful, right?

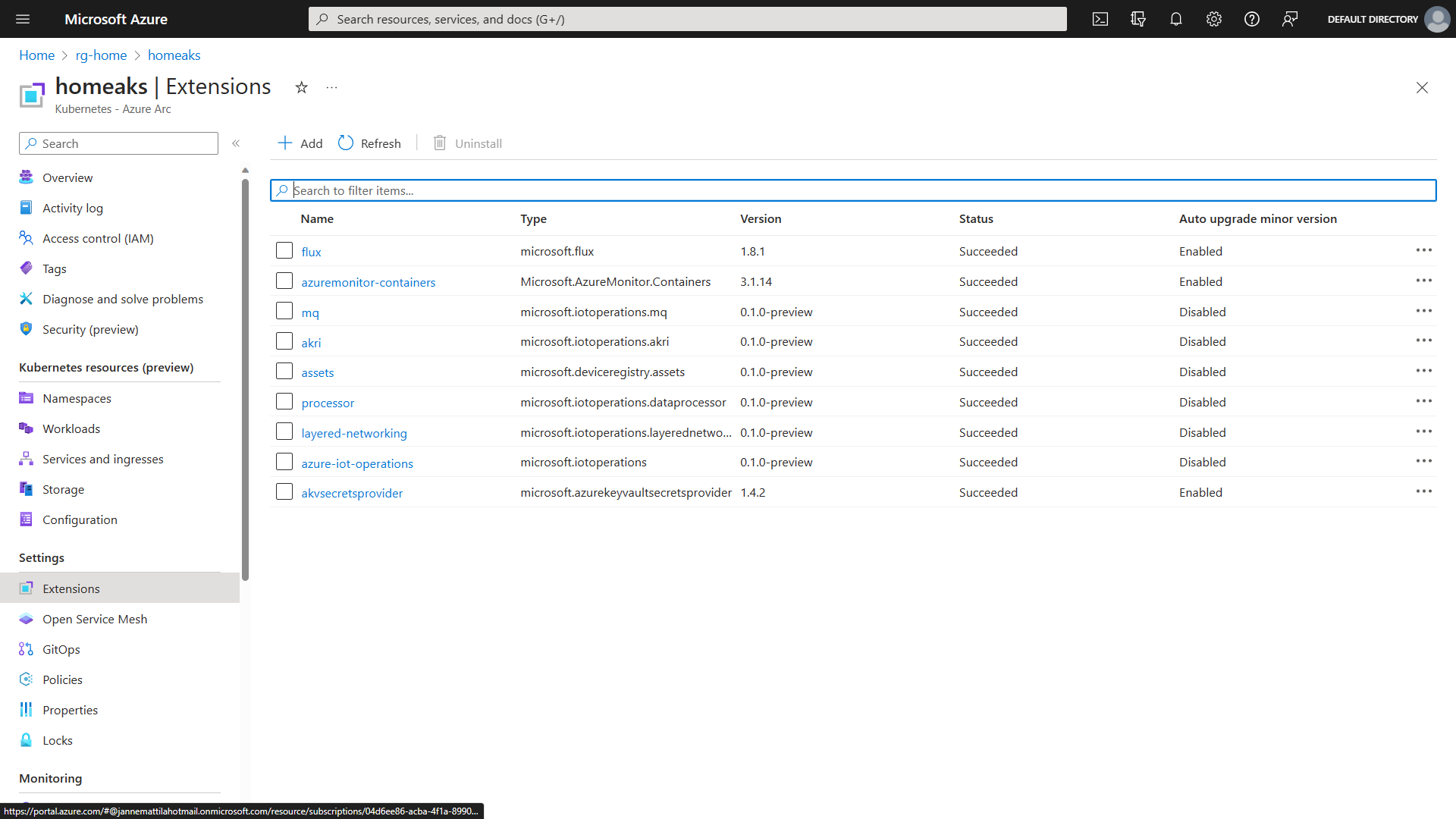

If you’re wondering how Azure IoT Operations was deployed to the edge, you can see it in the Kubernetes cluster’s extensions:

Summary

If you’re building an edge solution, then you should definitely check out Azure IoT Operations. Take it for a spin and let us know what you think about it.

I hope you find this useful!

JanneMattila/aks-ee-gitops
